Simulation

Part of the fun of working in the lidar space is that it opens up many adjacent projects. As part of our ongoing perception work, we are also building tools for simulation and data collection.

Consider all of these simulations to be unofficial, and please refer to the company webpage for official updates!

Update March 19

Happy first update!

Let's take a general look at the simulation project. We break it down into three main components:

- A content server - one of the most tedious parts of setting up a project like this is organizing all of our assets, especially when multiple parts of the project need information about them. To make this easier, we have a server that scans our filesystem for available assets and exposes them through a simple RESTful endpoint.

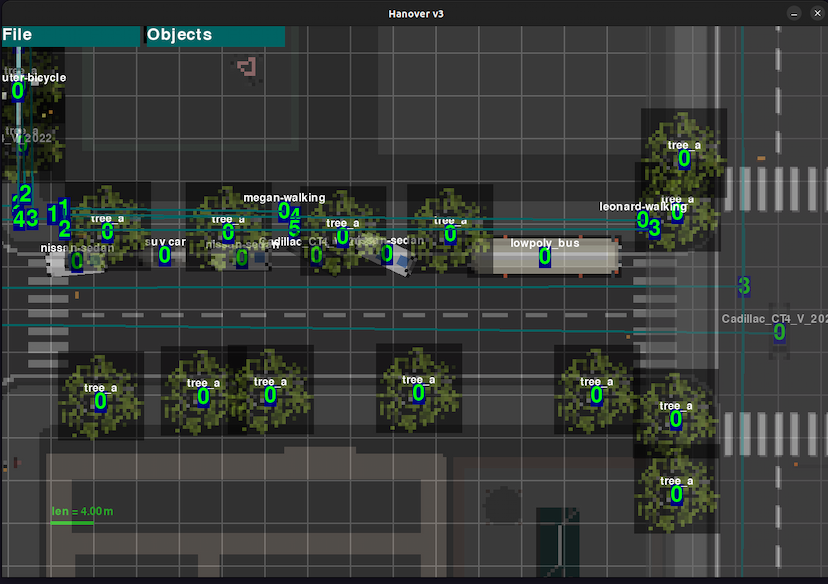

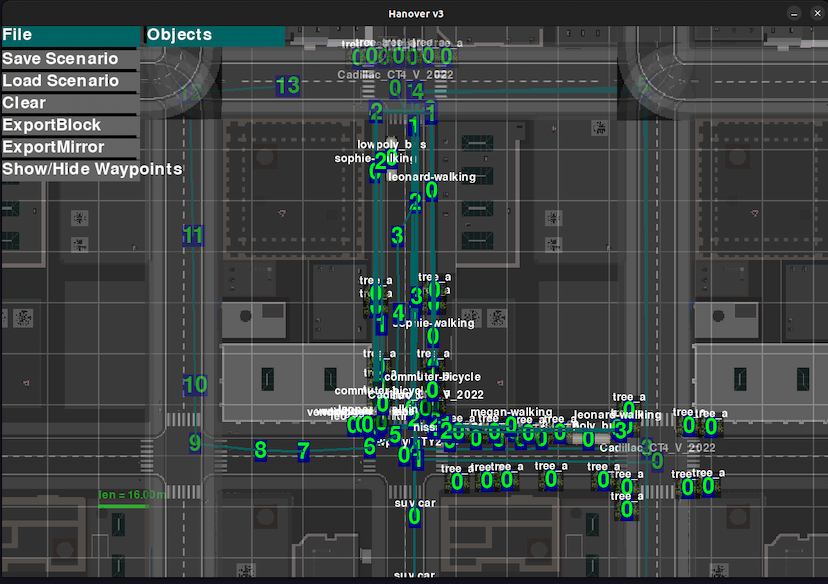

- The scene editor UI - a core part of the project is having some way to quickly create and edit scenes. For now, pygame is good enough for this purpose! Our editor is just a top-down view of the world. From here we can add and remove objects, define paths for objects to follow, and save scenes for later use.

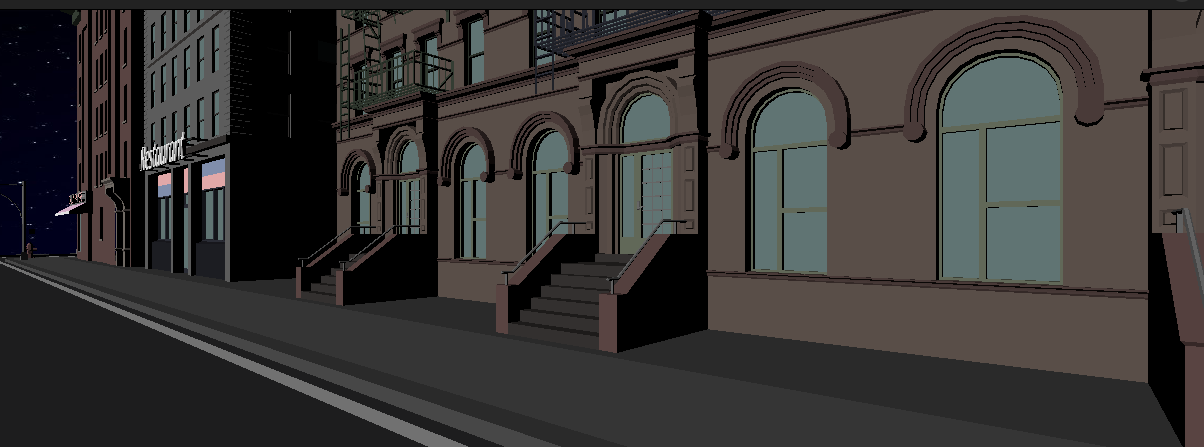

- The simulator - this is the real body of the project. The simulator loads scenes defined in the editor UI and receives assets from our content server. From there, it functions essentially like a video game engine, rendering the loaded scene.
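
To make the content server's job a little more concrete, here is a minimal sketch of the filesystem scan, using only the Rust standard library. The `AssetEntry` shape and the `scan_assets` name are assumptions made for illustration, not the project's actual schema, and the REST layer that would serve this index is left out.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// One entry in the asset index (hypothetical shape).
#[derive(Debug, Clone, PartialEq)]
pub struct AssetEntry {
    /// File stem, e.g. "car" for "car.obj".
    pub name: String,
    /// Lowercased file extension, e.g. "obj".
    pub extension: String,
    /// Full path to the file on disk.
    pub path: PathBuf,
}

/// Walk `root` recursively and collect every file that has an extension.
/// Results are sorted by path so the index is stable between runs.
pub fn scan_assets(root: &Path) -> io::Result<Vec<AssetEntry>> {
    let mut found = Vec::new();
    let mut pending = vec![root.to_path_buf()];

    // Iterative depth-first walk, so deep trees do not recurse.
    while let Some(dir) = pending.pop() {
        for entry in fs::read_dir(&dir)? {
            let path = entry?.path();
            if path.is_dir() {
                pending.push(path);
            } else if let (Some(stem), Some(ext)) = (path.file_stem(), path.extension()) {
                found.push(AssetEntry {
                    name: stem.to_string_lossy().into_owned(),
                    extension: ext.to_string_lossy().to_lowercase(),
                    path: path.clone(),
                });
            }
        }
    }

    found.sort_by(|a, b| a.path.cmp(&b.path));
    Ok(found)
}
```

A server could run a scan like this at startup, or on a timer, and serialize the resulting list for whichever endpoint the editor and simulator query.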

Yes, but how do we get the point cloud?

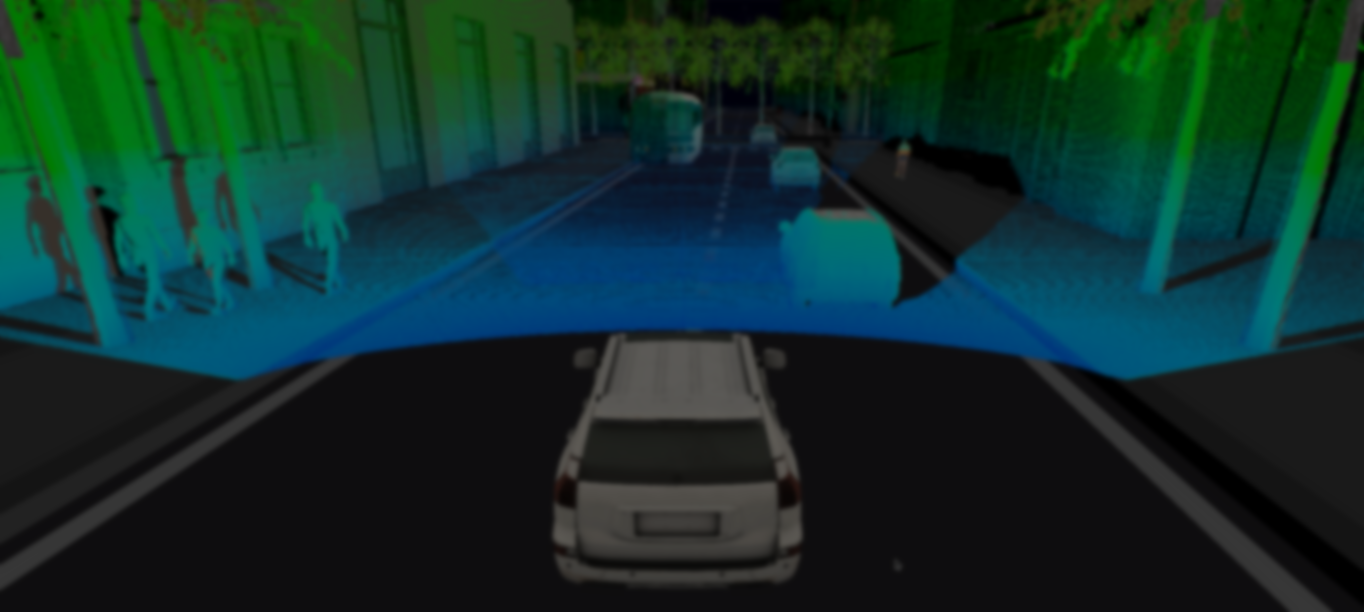

This part is fun. Because we want the simulation to run in real time, the point cloud generation needs to run on the GPU, so we're going to flex the power of our shaders. We create a second camera, positioned on the roof of the car you're seeing below, just above the windshield.

We attach a texture to render to, but instead of writing colors, we write the world-space position of each fragment! Because the GPU interpolates vertex outputs across each triangle before the fragment shader runs, passing the vertex world positions through to the fragment shader gives us the world coordinate of every pixel on the screen.
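
The interpolation step is easy to picture on the CPU. The sketch below is an illustration only, not the project's shader code: it blends three vertex world positions with barycentric weights, which is roughly what the rasterizer does for each pixel inside a triangle. The function name is made up for this example, and perspective correction is left out to keep it short.

```rust
/// World-space position as (x, y, z).
type Vec3 = [f32; 3];

/// Blend three vertex positions using barycentric weights that sum to 1.
/// This mirrors how per-vertex outputs are interpolated across a triangle
/// before the fragment shader sees them (ignoring perspective correction).
fn interpolate_world_pos(verts: [Vec3; 3], weights: [f32; 3]) -> Vec3 {
    let mut out = [0.0f32; 3];
    for (vert, weight) in verts.iter().zip(weights.iter()) {
        for axis in 0..3 {
            out[axis] += vert[axis] * *weight;
        }
    }
    out
}
```

In the GPU version, the fragment shader would simply write this interpolated value to the render target. That target needs a floating-point texture format so that world coordinates are not clamped to the 0 to 1 range the way ordinary color values are.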

We then generate the firing angles of our lidar (in image coordinates), and map these onto the world-position texture to tell which positions are hit by our scan.
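
As a rough sketch of that mapping, the code below turns a firing angle into the texel it would sample, assuming a simple pinhole camera that looks along +z with +x to the right and +y up. The `ScanCamera` struct, its fields, and the evenly spaced beam pattern are assumptions for illustration. A real lidar has its own beam layout, and the simulator does this lookup on the GPU.

```rust
/// Pinhole camera behind the world-position texture (hypothetical parameters).
struct ScanCamera {
    width: u32,
    height: u32,
    /// Full horizontal field of view, in radians.
    h_fov: f32,
    /// Full vertical field of view, in radians.
    v_fov: f32,
}

impl ScanCamera {
    /// Map one beam, given as azimuth and elevation in radians relative to
    /// the camera's forward axis, to the texel it lands on.
    /// Returns None when the beam points outside the camera's view.
    fn beam_to_texel(&self, azimuth: f32, elevation: f32) -> Option<(u32, u32)> {
        // Unit direction of the beam in camera space.
        let dir = [
            elevation.cos() * azimuth.sin(),
            elevation.sin(),
            elevation.cos() * azimuth.cos(),
        ];
        if dir[2] <= 0.0 {
            return None; // pointing behind the camera
        }
        // Perspective divide, then scale so the view edges sit at -1 and 1.
        let ndc_x = dir[0] / dir[2] / (self.h_fov * 0.5).tan();
        let ndc_y = dir[1] / dir[2] / (self.v_fov * 0.5).tan();
        if ndc_x.abs() >= 1.0 || ndc_y.abs() >= 1.0 {
            return None;
        }
        // Texture rows grow downward, so flip y.
        let u = ((ndc_x * 0.5 + 0.5) * self.width as f32) as u32;
        let v = ((0.5 - ndc_y * 0.5) * self.height as f32) as u32;
        Some((u.min(self.width - 1), v.min(self.height - 1)))
    }
}

/// Evenly spaced (azimuth, elevation) pairs centered on the forward axis.
fn firing_angles(h_steps: u32, v_steps: u32, h_span: f32, v_span: f32) -> Vec<(f32, f32)> {
    let mut angles = Vec::with_capacity((h_steps * v_steps) as usize);
    for row in 0..v_steps {
        let elevation = v_span * ((row as f32 + 0.5) / v_steps as f32 - 0.5);
        for col in 0..h_steps {
            let azimuth = h_span * ((col as f32 + 0.5) / h_steps as f32 - 0.5);
            angles.push((azimuth, elevation));
        }
    }
    angles
}
```

Each texel returned by `beam_to_texel` holds a world position, so reading the texture at those coordinates gives one lidar return per beam. Beams that map to `None` fall outside this camera's view and produce no return.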

At this point, we have everything we need, so the final step is to render everything, point cloud and objects, into the final display you're seeing below.

Dev Links

The simulator and content server are written in Rust. Rust's compile-time checking speeds up development a ton, even more so with a graphics project like this.

All rendering is done with wgpu. It's an awesome graphics API and I'd highly recommend trying it out.