Collision Detection

Introduction

In the development of StudioViz, a simulator created by Cepton’s in-house software engineers, we aim to implement a feature for detecting collisions between objects. This feature, known as Collision Detection, addresses the computational problem of identifying when two or more spatial objects intersect. It is a critical component in computer graphics, simulation, and various other applications where accurate spatial interactions between objects are necessary.

In the realm of games and simulation engines, collision detection plays a crucial role in ensuring realistic interactions between objects and their environment. This process involves calculating whether and when objects intersect, providing essential feedback for simulating real-world physics and behaviors. By accurately detecting collisions, developers can create environments where objects respond naturally to impacts, enhancing the realism and immersion of the simulation. While this doesn't involve direct obstacle avoidance, it establishes a foundational framework for evaluating collision scenarios during development and testing. This capability is particularly valuable for refining perception algorithms, ultimately contributing to the broader vision of safe and autonomous transportation by ensuring that simulated models behave as expected in various scenarios.

To achieve collision detection, various methods such as bounding volume hierarchies, spatial partitioning, and proximity queries may be employed. These techniques aim to minimize computational overhead while maintaining high accuracy in detecting collisions. Implementing the Collision Detection feature in StudioViz enhances the simulator's capabilities, enabling more realistic and interactive simulations for users. In this blog post, we will cover the algorithm behind collision detection and demonstrate various scenarios that simulate real-world car collisions using Pygame, a set of Python modules designed for writing video games.

Collision Detection Algorithm Explanation

When it comes to collision detection for cars, several methods are available, each with distinct advantages and disadvantages. These methods include Axis-Aligned Bounding Box (AABB), Oriented Bounding Box (OBB), and Separating Axis Theorem (SAT). Here is a detailed examination of each method:

| Method | Pros | Cons |
|---|---|---|
| AABB | Computationally inexpensive; easy to implement | Inaccurate for objects that are not axis-aligned |
| OBB | Better accuracy and versatility | More complex implementation |
| SAT | Even higher accuracy and versatility | Most complex implementation |
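To make the AABB trade-off concrete, here is a minimal Python sketch of an axis-aligned overlap test. The `AABB` type and the helper names are illustrative assumptions, not part of StudioViz. The example at the bottom shows the weakness listed in the table: a rotated shape's bounding box can overlap another box even though the shapes themselves do not touch.

```python
from typing import List, NamedTuple, Tuple


class AABB(NamedTuple):
    """Axis-aligned box given by its min and max corners (hypothetical type)."""
    min_x: float
    min_y: float
    max_x: float
    max_y: float


def aabb_of(points: List[Tuple[float, float]]) -> AABB:
    """Smallest axis-aligned box that encloses a list of (x, y) points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return AABB(min(xs), min(ys), max(xs), max(ys))


def aabb_overlap(a: AABB, b: AABB) -> bool:
    """True when the boxes overlap on both the x axis and the y axis."""
    return (a.min_x <= b.max_x and b.min_x <= a.max_x
            and a.min_y <= b.max_y and b.min_y <= a.max_y)


# A square rotated by 45 degrees (a diamond) and a small square near its corner.
diamond = [(0, 2), (2, 0), (4, 2), (2, 4)]
square = [(3.5, 3.5), (4.5, 3.5), (4.5, 4.5), (3.5, 4.5)]

# The bounding boxes overlap even though the shapes are apart: a false positive.
print(aabb_overlap(aabb_of(diamond), aabb_of(square)))  # True
```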

We have ultimately selected the Separating Axis Theorem (SAT) for car collision detection due to its superior accuracy, despite its complex implementation. When dealing with lidar systems, it is crucial to ensure that collisions are detected with the highest precision. SAT provides the necessary level of accuracy, making it the most suitable choice for our application.

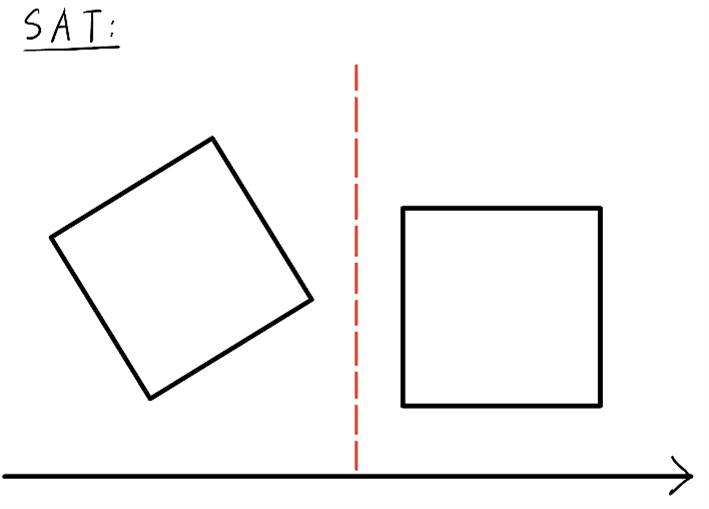

SAT is quite simple in concept. If you can draw at least one straight line that passes between two convex objects without touching either of them, then those two objects are not colliding. If no such line exists, then they are colliding.

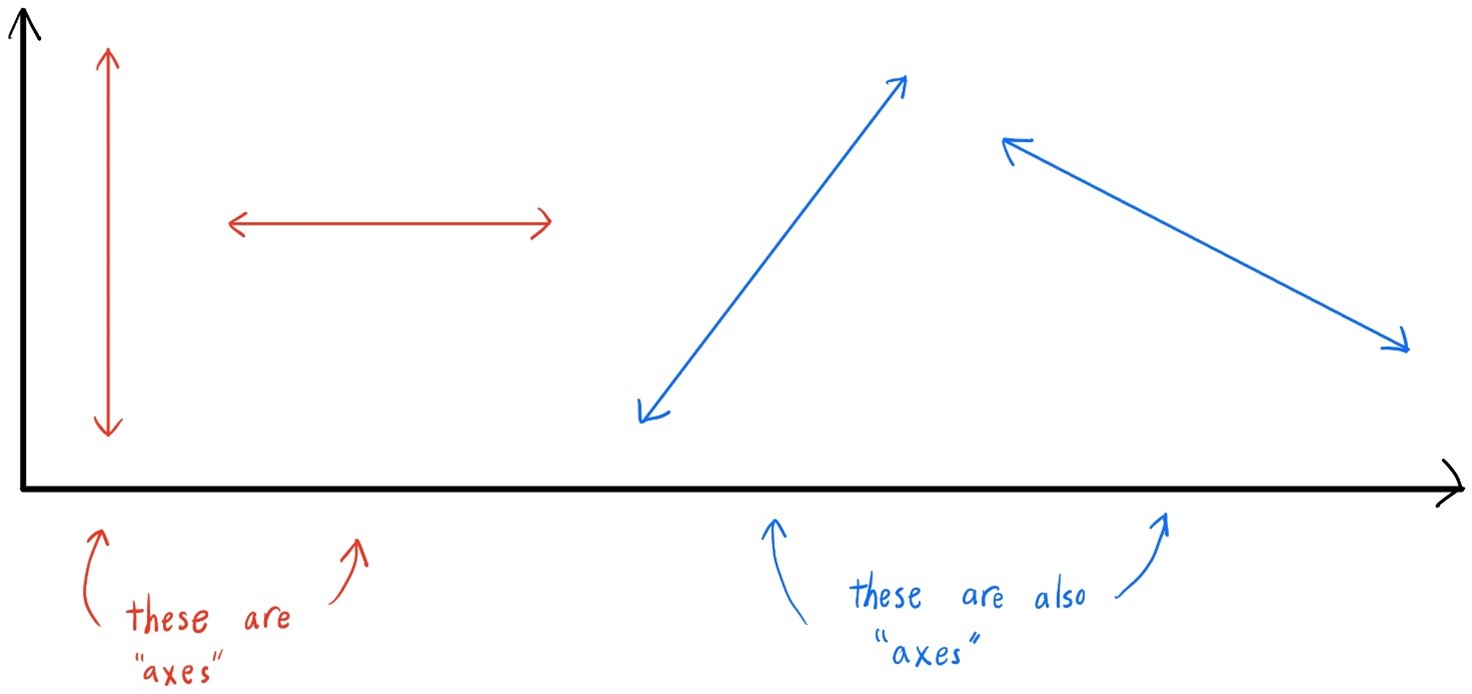

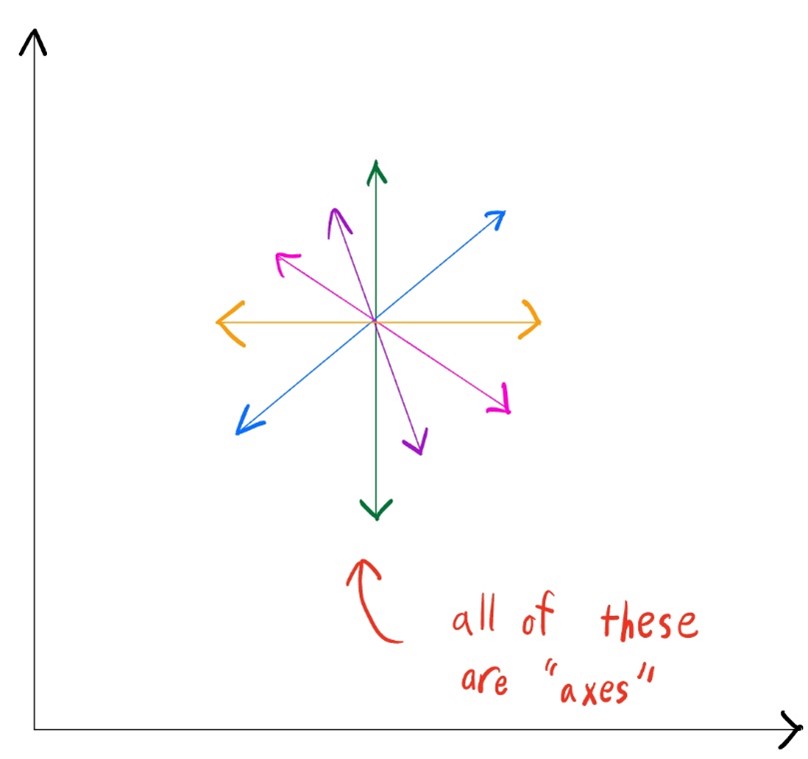

We refer to each straight line as an “axis”. But unlike the usual x-axis and y-axis that you may be used to, any straight line is considered an axis. SAT axes are not just limited to horizontal and vertical axes.

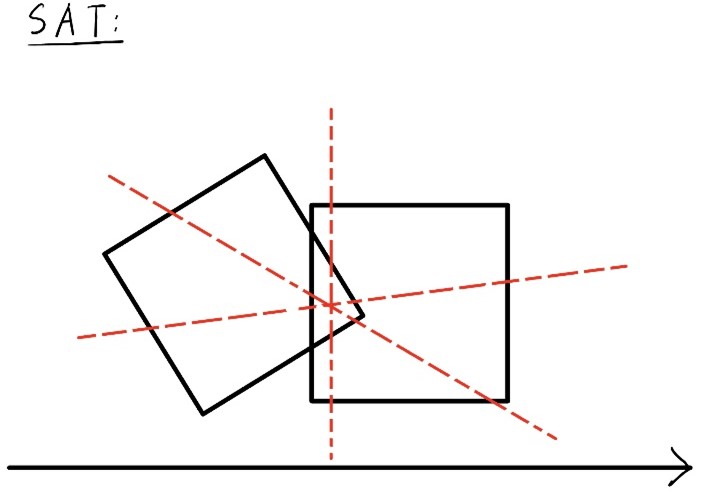

We quickly ran into an issue when writing code for this algorithm. If any straight line is considered an axis, then there are an infinite number of axes to test. This makes it impossible to compute collision detection through sheer brute force.

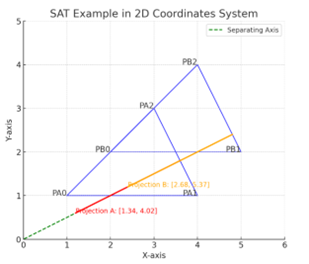

The beauty of SAT comes from its systematic methodology of determining which axes to test. Generally speaking, the SAT operates by projecting the vertices of the shapes onto potential separating axes, which are typically the normal vectors to the edges of the shapes. For each axis, the algorithm checks whether the projections of the two shapes overlap. If there exists at least one axis where the projections do not overlap, it indicates a separating axis, and the shapes are not colliding. Conversely, if the projections overlap on all tested axes, the shapes are intersecting. This method ensures precise collision detection by accounting for the actual geometry and orientation of the objects involved.
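The procedure described above can be sketched in a few lines of Python. This is a minimal illustration for convex polygons given as lists of `(x, y)` vertices, not the StudioViz implementation; the function names are our own for this example.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def edge_normals(polygon: List[Point]) -> List[Point]:
    """Return one (unnormalized) normal vector per edge of the polygon."""
    normals = []
    for i in range(len(polygon)):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % len(polygon)]
        # The perpendicular of an edge (dx, dy) is (-dy, dx).
        normals.append((-(y2 - y1), x2 - x1))
    return normals


def project(polygon: List[Point], axis: Point) -> Tuple[float, float]:
    """Project every vertex onto the axis and return the (min, max) interval."""
    dots = [x * axis[0] + y * axis[1] for x, y in polygon]
    return min(dots), max(dots)


def sat_collide(a: List[Point], b: List[Point]) -> bool:
    """True if convex polygons a and b intersect (touching counts as a hit)."""
    for axis in edge_normals(a) + edge_normals(b):
        min_a, max_a = project(a, axis)
        min_b, max_b = project(b, axis)
        if max_a < min_b or max_b < min_a:
            return False  # Found a separating axis: no collision.
    return True  # Projections overlap on every candidate axis.


diamond = [(0, 2), (2, 0), (4, 2), (2, 4)]
near_corner = [(3.5, 3.5), (4.5, 3.5), (4.5, 4.5), (3.5, 4.5)]
inside = [(2.5, 2.5), (3.5, 2.5), (3.5, 3.5), (2.5, 3.5)]

print(sat_collide(diamond, near_corner))  # False
print(sat_collide(diamond, inside))       # True
```

The axes are left unnormalized because only the ordering of the projected intervals matters for a yes/no answer. Note that this test is only valid for convex shapes.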

This example demonstrates the SAT test on two convex objects in a 2D coordinate system. The green dashed line represents a potential separating axis, while the red and orange lines depict the projections of Polygons A and B onto this axis. Because the projections overlap along the green line, this axis does not separate the polygons; they are colliding only if the projections also overlap on every other candidate axis.

Visualizing in Pygame

Creating an Object Class

We defined a class called ‘SimplifiedObjects’ to represent a two-dimensional rectangular object, with functionality for manipulating its position and rotation and for checking collisions with other objects of the same type.

Attributes

This class stores essential attributes such as the vertices of the rectangle, its center, dimensions (width and length), the direction it is facing, the angle of rotation, and a flag indicating if it has collided with another object.

Methods

Getters and Setters: Allow easy access to and modification of attributes such as the angle and coordinate positions.

Movements: Let us move the object in a given direction intuitively.

Collision Detection: Applies the SAT to check for collisions with another object.

Helper Methods: Calculate distances between points, update the object's center, direction, and angle, and rotate its vertices.
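As a rough illustration of the attributes and the vertex-rotation helper described above, here is a minimal sketch. `Rect2D` and its field names are hypothetical stand-ins chosen for this example; they are not the actual ‘SimplifiedObjects’ class.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rect2D:
    """Hypothetical rectangular object; not the actual SimplifiedObjects class."""
    cx: float                 # x coordinate of the center
    cy: float                 # y coordinate of the center
    width: float              # extent across the heading direction
    length: float             # extent along the heading direction
    angle_deg: float = 0.0    # rotation about the center, in degrees
    collided: bool = False    # set by whatever collision check is used

    def vertices(self) -> List[Tuple[float, float]]:
        """Corners of the rectangle after rotating it about its center."""
        theta = math.radians(self.angle_deg)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        half_l, half_w = self.length / 2, self.width / 2
        local = [(half_l, half_w), (-half_l, half_w),
                 (-half_l, -half_w), (half_l, -half_w)]
        # Standard 2D rotation, then translation to the center.
        return [(self.cx + x * cos_t - y * sin_t,
                 self.cy + x * sin_t + y * cos_t) for x, y in local]

    def rotate(self, delta_deg: float) -> None:
        """Turn the rectangle by delta_deg, keeping the angle in [0, 360)."""
        self.angle_deg = (self.angle_deg + delta_deg) % 360


car = Rect2D(cx=0, cy=0, width=2, length=4)
print(car.vertices())  # [(2.0, 1.0), (-2.0, 1.0), (-2.0, -1.0), (2.0, -1.0)]
```

Storing only the center, dimensions, and angle and deriving the vertices on demand is one possible design; the class described in this post stores the vertices directly.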

Show_objects

We created another function called ‘show_objects’ to render a collection of objects onto a Pygame screen. Each object is represented by an image, which is scaled to fit within the screen dimensions while maintaining its aspect ratio. The function also handles rotation of the images based on the angle provided by each object. It detects and highlights collisions by overlaying a semi-transparent red bounding box on the colliding objects.
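The rendering steps described above could look roughly like the following sketch. The names `fit_size` and `draw_object`, the parameters, and the `max_fraction` default are assumptions made for this example and do not reproduce the actual ‘show_objects’ function. Pygame is imported inside `draw_object` so that the pure scaling helper can be tried without a display.

```python
from typing import Sequence, Tuple


def fit_size(image_size: Tuple[int, int], screen_size: Tuple[int, int],
             max_fraction: float = 0.2) -> Tuple[int, int]:
    """Scale (w, h) to fit within max_fraction of the screen, keeping aspect ratio."""
    img_w, img_h = image_size
    scr_w, scr_h = screen_size
    # Use the smaller scale factor so both dimensions fit.
    scale = min(scr_w * max_fraction / img_w, scr_h * max_fraction / img_h)
    return max(1, round(img_w * scale)), max(1, round(img_h * scale))


def draw_object(screen, image, center: Tuple[float, float], angle_deg: float,
                vertices: Sequence[Tuple[float, float]], collided: bool) -> None:
    """Draw one object; overlay a translucent red box if it has collided."""
    import pygame  # Lazy import: only needed when actually drawing.

    scaled = pygame.transform.scale(
        image, fit_size(image.get_size(), screen.get_size()))
    # pygame.transform.rotate takes degrees and rotates counterclockwise.
    rotated = pygame.transform.rotate(scaled, angle_deg)
    screen.blit(rotated, rotated.get_rect(center=center))

    if collided:
        # A per-pixel-alpha surface lets the red box be semi-transparent.
        overlay = pygame.Surface(screen.get_size(), pygame.SRCALPHA)
        pygame.draw.polygon(overlay, (255, 0, 0, 100), list(vertices))
        screen.blit(overlay, (0, 0))


print(fit_size((200, 100), (800, 600)))  # (160, 80)
```

In a real loop, `draw_object` would be called once per object per frame, after clearing the screen and before `pygame.display.flip()`.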

Demo

Basic Movements

In this part, we demonstrate the basic movement of a car within Pygame. This example showcases the fundamental functionality available to users for simulating vehicular motion.
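For readers who want to experiment, moving a car forward along its heading comes down to a little trigonometry. The sketch below is an assumption about how such a step could be computed, not the code used in the demo. It assumes the heading is measured counterclockwise from the positive x axis and that positions are in Pygame screen coordinates, where y grows downward.

```python
import math
from typing import Tuple


def step_forward(x: float, y: float, heading_deg: float,
                 distance: float) -> Tuple[float, float]:
    """Return the new (x, y) after moving `distance` along the heading.

    The y term is subtracted because screen y increases downward, so a
    heading of 90 degrees moves the car up the screen.
    """
    theta = math.radians(heading_deg)
    return x + distance * math.cos(theta), y - distance * math.sin(theta)


# Drive 5 pixels to the right, then 5 pixels up the screen.
x, y = step_forward(100.0, 100.0, 0.0, 5.0)
x, y = step_forward(x, y, 90.0, 5.0)
print(round(x, 6), round(y, 6))  # 105.0 95.0
```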

Scenarios

In this section, we aim to showcase various scenarios demonstrating car movements within Pygame, specifically focusing on collision and non-collision events. These scenarios will highlight the ability to accurately visualize and handle different collision dynamics, providing valuable insights into the behavior of vehicles in diverse situations.

Future Improvements

The next step in enhancing StudioViz involves transitioning from 2D to 3D visualization, incorporating more complex and realistic elements such as car models, trees, and pedestrians. This will provide a more immersive and accurate simulation of real-world scenarios. It also brings the challenge of detecting collisions between irregular shapes, so that objects know whether they are coming into contact with the ground, buildings, and trees. Eventually, we would also like to use machine learning to predict and prevent collisions based on historical data and real-time inputs.

References

"Separating Axis Theorem," Wikipedia, https://en.wikipedia.org/wiki/Hyperplane_separation_theorem.

Kah Shiu Chong, "Collision Detection Using the Separating Axis Theorem," Envato Tuts+, last modified August 6, 2012, https://code.tutsplus.com/collision-detection-using-the-separating-axis-theorem--gamedev-169t

OpenAI, ChatGPT, response to a question about a Separating Axis Theorem example, June 19, 2024.